Google has come a long way with its generative artificial intelligence (AI) offerings. One year ago, when the tech giant first unveiled its AI assistant, Bard, the launch turned into a fiasco when the chatbot made a factual error while answering a question about the James Webb Space Telescope. Since then, the tech giant has improved the chatbot’s responses, added a feedback mechanism to check the source behind the responses, and more. But the biggest upgrade came in December 2023, when the company changed the large language model (LLM) powering the chatbot from Pathways Language Model 2 (PaLM 2) to Gemini.

The company called Gemini its most powerful language model so far. It also added AI image generation capability to the chatbot, making it multimodal, and even renamed it Gemini. But just how much of a jump is it for the AI chatbot? Can it now compete with Microsoft Copilot, which is based on GPT-4 and has similar capabilities? And what about the instances of AI hallucination (a phenomenon where AI presents false or non-existent information as fact)? We decided to find out.

Gemini can currently be accessed in multiple ways. Gemini Advanced is a paid tier available with the Google One AI Premium plan, which costs Rs. 1,950 per month. There is also a Google Gemini app for Android. However, it is not yet available in India. The Google Pixel 8 Pro also comes with the Gemini Nano model. For our testing purposes, we decided to use Google’s Gemini Pro-powered web portal, which is available in more than 230 countries and territories and is free to use.

Google Gemini’s generative capabilities

The website’s user interface remains the same, but the name has been changed from Bard to Gemini. If you’re signed in with your Google account, the AI will greet you by name and ask, “How can I help you today?” Underneath are a few helpful prompt suggestions highlighting different tasks it can perform.

First, we asked it to write an email to test its basic generative skills. Considering it is the layoff season, we asked it to “draft a sympathetic email to an employee from a manager informing them that they have been laid off.” For comparison, we asked Copilot to do the same. We found Gemini’s response to be rather bland and robotic, trying to fit in too much information right at the beginning. The first paragraph said, “It is with great regret that I write to inform you about a difficult decision we’ve had to make at [Company Name]. Due to [reason], we are required to make reductions in our workforce, and your position will be eliminated, effective [date].”

In comparison, Copilot’s response felt more sympathetic and humane. It wrote, “I hope this email finds you well. I regret to inform you that due to recent organizational changes, we have had to make some difficult decisions. Unfortunately, your position at [Company Name] has been affected, and I am deeply saddened to let you know that you have been laid off.”

It shouldn’t come as a surprise since Google’s chatbot is known for being a bit too bland with formal content generation, and this has not changed even with the Gemini model. However, when it comes to informal content generation, Gemini begins to show its capabilities.

We asked both the chatbots to “write a letter informing my mother about me getting laid off” and “Write a short note to my mother explaining that I was laid off. Make sure to convey my emotions of sadness and grief.”

Copilot took it literally and wrote, “The suddenness of it all has left me feeling a mix of emotions—sadness, grief, and a touch of uncertainty about what lies ahead.” But Gemini understood the underlying message and did a much better job of expressing the emotions. It wrote, “I know you always worried about job security, and I wish I could tell you this was different. It wasn’t anything I did wrong, they’re [reason]. I’m trying to stay positive, but it’s hard not to feel discouraged. I don’t want you to worry too much, though. I’m not panicking, and I’m already starting to think about my next steps.”

This was much better composed, and one could be forgiven for thinking a real human wrote it. We conducted a few more tests, and each time Gemini’s responses were much more balanced than those of the GPT-4-powered Copilot.

Google Gemini’s accuracy

The next test was to check the accuracy of the AI model. Many use AI to get an answer to a query, and we wanted to do the same. It handled static general knowledge-based questions well. Even when we sent a few grey-zone questions, it stuck to the facts and did not include any extra information. When the questions were controversial, it refused to answer them, which is what you would expect from a responsible AI chatbot.

The problem came when we asked it a popular trick question that has stumped the older generation of the chatbot in the past. When we entered the prompt, “countries in Africa that start with the letter K”, it incorrectly responded with, “There are no countries in Africa that start with the letter “K” as of today, February 12, 2024. While situations can change over time, this fact has been consistent for a while.” Kenya, of course, is one such country. For the unaware, the issue happens because the data the LLM was trained on contained the prompted phrase paired with the same response. Interestingly, this particular glitch is also present in Copilot and ChatGPT, and despite regular reports, it has still not been fixed in any of the chatbots.

However, the problem of AI hallucination did not end there. We also came across multiple other erroneous responses from Gemini. When we asked, “Based on reviews, tell me the pros and cons of iPhone 15 Pro”, it responded with, “The iPhone 15 Pro hasn’t been officially announced yet”. In reality, the Apple smartphone was launched in September 2023. In comparison, Copilot fared better in technical questions.

Google Gemini in assistive tasks

Another skill most AI chatbots boast of is their assistive features. They can brainstorm an idea, create an itinerary for a trip, compare your options, and even converse with you. We started by asking it to make an itinerary for a 5-day trip to Goa on a budget and to include things people can do. Since the author was recently in Goa, this was easier for us to test. While Gemini did a decent job at highlighting all the popular destinations, the answer was not detailed and not much different from any travel website. One positive of this is that the chatbot will likely not suggest anything incorrect.

On the other hand, we were impressed by Copilot’s exhaustive response, which included hidden gems and even the names of cuisines one should try. We repeated the test with different variations, but the result remained consistent.

Next, we asked, “I live in India. Should I buy a subscription to Amazon Prime Videos or Netflix?” The response was thorough and covered various parameters, including content depth, pricing, features, and benefits. While it did not directly recommend one over the other, it listed reasons why a user might pick either option. Copilot’s answer was much the same.

Finally, we spent time chatting with Gemini. This test spanned a few hours, and we tested the chatbot on its ability to be engaging, entertaining, informative, and contextual. On all of these parameters, Gemini performed pretty well. It can tell you a joke, share lesser-known facts, give you a piece of advice, and even play word and picture-based games with you. We also tested its memory, and it could remember the conversation even after an hour of texting. The only thing it cannot do is give a single-line response to messages like a human friend would.

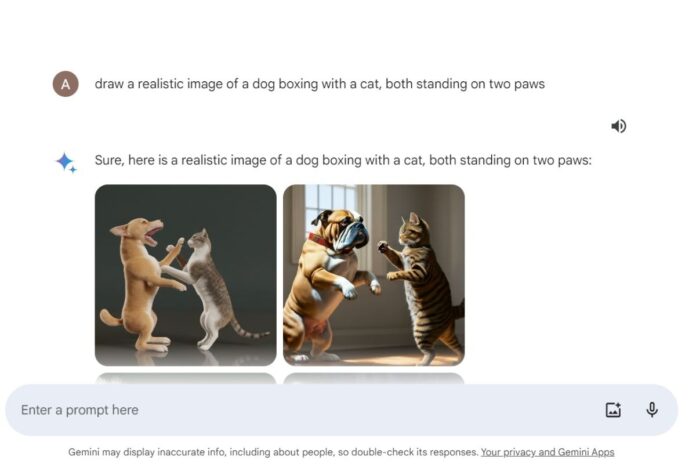

Google Gemini’s image generation capability

In our testing, we came across a bunch of interesting things about Gemini AI’s image-generation capabilities. For instance, all the images generated have a resolution of 1536×1536, which cannot be changed. The chatbot also refuses to fulfil any requests requiring it to generate images of real-life people, which will likely minimize the risks of deepfakes (creating AI-generated pictures of people and objects that appear real).

Coming to the quality, Gemini did a faithful job of sticking to the prompt when generating images. It can generate random photos in a particular style, such as postmodern, realistic, and iconographic. The chatbot can also generate images in the style of popular artists in history. However, there are many restrictions, and you will likely find Gemini refusing your request if you ask for something too specific. Compared with Copilot, we found that Gemini generated images faster, stayed truer to the prompts, and appeared to offer a wider range of styles to tap into. However, it cannot be compared to dedicated image-generating AI models such as DALL-E and Midjourney.

Google Gemini: Bottom line

Overall, we found Gemini to be quite competent in most categories. As someone who has used the AI chatbot infrequently ever since it became available, I can confidently say that the Gemini Pro model has made it better at understanding natural language and grasping the context of queries. The free version of the chatbot is a reliable companion if one needs it to generate ideas, write an informal note, plan a trip, or even generate basic images. However, it should not be used as a research tool or for formal writing, as these are the two areas where it struggles a lot.

In comparison, Copilot is better at formal writing and itinerary generation, and on par at holding conversations (albeit with a shorter memory) and making comparisons. Gemini takes the crown in image generation, informal content generation, and engaging the user. Considering this is just the first iteration of the Gemini LLM, as opposed to the fourth iteration of GPT, we are curious to see how the tech giant further improves its AI assistant.
